According to The Verge, Anthropic is launching a one-week pilot research program in which an AI will conduct 10-to-15-minute interviews with users about their experiences with AI. The AI interviewer will ask questions such as what users would ideally want AI’s help with and whether they see potential developments that conflict with their values. The initiative is being run by Anthropic’s societal impacts team as part of a push to do more social science research on AI’s effects. The AI itself even acknowledges the oddity, telling participants that “AI asking about AI [is a] bit self-referential.” The immediate outcome is a limited data-gathering exercise, but the broader goal is to inform Anthropic’s development and deployment strategies.

The Meta Loop

So here’s the thing. Using an AI to research how people feel about AI is incredibly meta. It’s like a snake trying to study its own venom by asking mice what the bite felt like. Anthropic is basically creating a feedback loop where the tool becomes the researcher of its own impact. I think the big question is: can you get honest, nuanced human feedback through an interface that is, itself, the subject of that feedback? There’s a built-in risk of bias. People might tailor their responses knowing they’re talking to the very technology they’re critiquing, or they might anthropomorphize the interviewer and go easier on it. It’s a fascinating, if flawed, experiment in reflexive research.

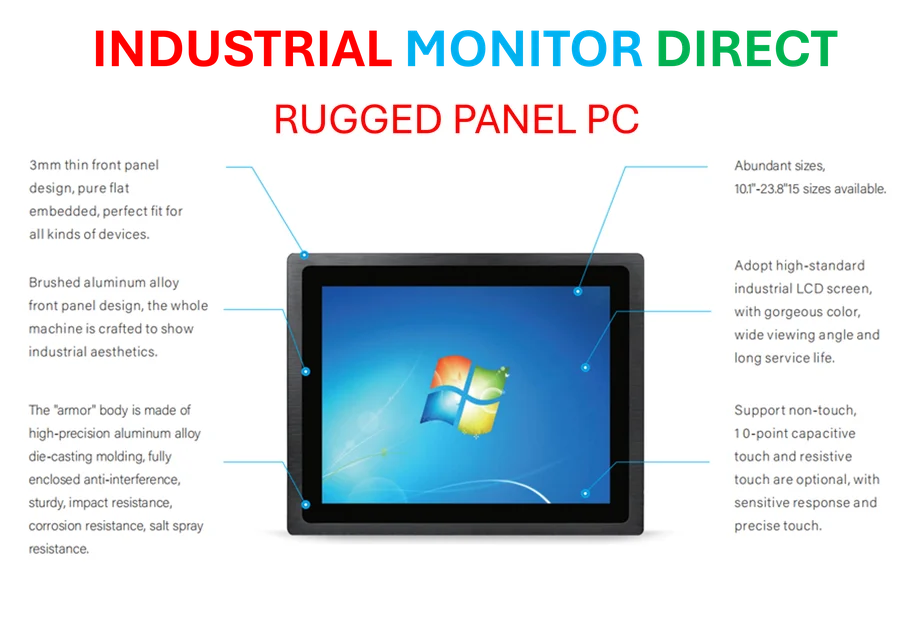

How It Probably Works

Technically, this isn’t some magical new model. It’s almost certainly a carefully constrained and scripted application of Claude, Anthropic’s own AI. The interview is likely a structured conversation flow (a fancy, dynamic survey) where the AI follows a pre-defined research protocol but uses its natural language ability to ask follow-ups or clarify answers. The challenge for Anthropic’s team is balancing consistency with openness. They need every participant to cover the core research questions so the data is comparable, but they also want the flexibility to explore unexpected insights. That’s a tough trade-off. Too rigid, and you lose the “interview” advantage over a static form. Too loose, and your data becomes messy and hard to analyze.
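To make that trade-off concrete, here is a minimal Python sketch of what a "fixed protocol plus bounded follow-ups" flow could look like. This is purely a guess at the shape, not Anthropic's implementation: the question wording is paraphrased from the reporting, and every name here (`CORE_QUESTIONS`, `run_interview`, `short_answer_probe`, `max_follow_ups`) is hypothetical. A real system would presumably have a language model generate the follow-ups; a simple word-count rule stands in for it so the example runs on its own.

```python
from typing import Callable, Dict, List, Optional, Tuple

# One transcript turn: (core question id, prompt shown, participant answer).
Turn = Tuple[str, str, str]

# Hypothetical core protocol. Wording is paraphrased from the article,
# not taken from any actual interview script.
CORE_QUESTIONS: Dict[str, str] = {
    "ideal_help": "What would you ideally want AI's help with?",
    "value_conflicts": "Do you see AI developments that conflict with your values?",
}


def short_answer_probe(question: str, answer: str) -> Optional[str]:
    """Stand-in for a model-generated follow-up (an assumption).

    Probes only when the answer is under five words; otherwise returns None.
    """
    if len(answer.split()) < 5:
        return "Could you say a bit more about that?"
    return None


def run_interview(
    ask: Callable[[str], str],
    follow_up: Callable[[str, str], Optional[str]],
    max_follow_ups: int = 1,
) -> List[Turn]:
    """Ask every core question, allowing a capped number of follow-ups each.

    Consistency: every participant is asked every core question.
    Openness: follow-ups are allowed, but capped by max_follow_ups.
    """
    transcript: List[Turn] = []
    for qid, question in CORE_QUESTIONS.items():
        answer = ask(question)
        transcript.append((qid, question, answer))
        for _ in range(max_follow_ups):
            probe = follow_up(question, answer)
            if probe is None:
                break  # nothing worth probing; move to the next core question
            answer = ask(probe)
            transcript.append((qid, probe, answer))
    return transcript


# Demo with scripted answers standing in for a live participant.
scripted = iter([
    "Coding.",
    "Mostly debugging legacy code at work.",
    "Yes, I worry about surveillance uses of AI.",
])
for qid, prompt, reply in run_interview(lambda q: next(scripted), short_answer_probe):
    print(f"[{qid}] {prompt} -> {reply}")
```

The single `max_follow_ups` knob is the whole trade-off in miniature: set it to 0 and you have a static form, raise it and each transcript drifts further from the others. Tagging every turn with its core question id is one way to keep the looser answers comparable afterward.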

The Bigger Picture

Look, this pilot is small—just a week—but it signals where AI ethics and safety research is headed. Companies can’t just build in a vacuum anymore; there’s pressure to demonstrate they’re listening. And what’s more performative than having your AI directly ask for its own report card? But skepticism is healthy. Is this genuine social science or a PR move wrapped in research? The proof will be in what Anthropic does with the findings. Do they actually change course based on user “visions and values,” or does this just become a footnote in a model card? It’s a step towards more interactive research, but the self-referential elephant in the room is huge. Basically, we’re watching a tool try to understand its own shadow.