According to TechRepublic, Australia is implementing a world-first social media ban for children under 16, taking effect December 10, with platforms facing fines up to $49.5 million for serious violations. The policy targets Facebook, Instagram, Threads, Snapchat, TikTok, X, YouTube, Reddit, Kick, and Twitch, requiring them to use “age assurance technologies” beyond simple self-declaration. The government’s own study found that 96% of children aged 10-15 use social media, with 70% encountering dangerous content and one in seven experiencing grooming-like behavior. Meta will begin removing teen accounts from December 4, while other platforms are developing compliance plans using methods ranging from government ID verification to facial recognition.

How will this actually work?

Here’s the thing – the burden falls entirely on platforms to figure out age verification, and nobody’s quite sure what “reasonable steps” actually means in practice. The government suggests combining methods like government IDs, facial recognition, or age inference from online behavior. But it has explicitly ruled out relying solely on self-declared ages or parental approval, which is how most platforms currently check ages.

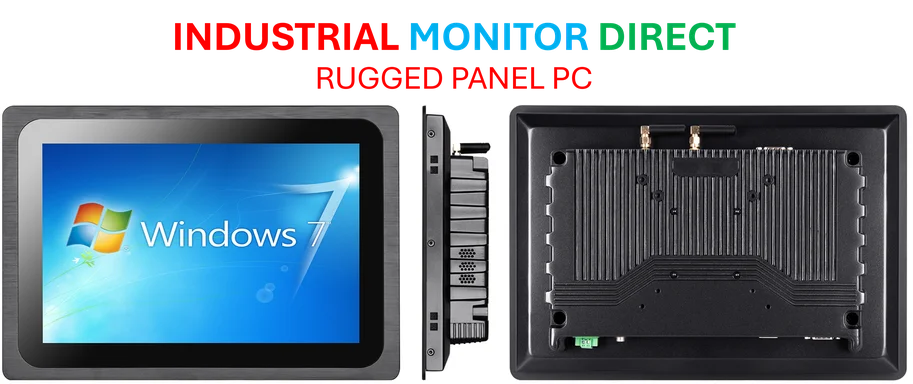

Some companies are already scrambling. Meta’s going with government ID or video selfie verification for disputed cases. Snapchat might use bank accounts or photo IDs. But the technology itself is far from perfect – the government’s own research found facial assessment tools are least accurate for the very age group they’re targeting. So we’re probably looking at both false positives (teens getting wrongly booted) and false negatives (younger kids slipping through).
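
To make the two failure modes concrete, here is a minimal Python sketch. Everything in it is an assumption for illustration: the `Check` record, the `outcome` function, and the sample ages are made up, and no real age-estimation tool is being modeled. It only shows how an estimate that is off by a year or two around the 16 cutoff produces both kinds of error.

```python
from dataclasses import dataclass

MIN_AGE = 16  # age threshold from the ban described above


@dataclass
class Check:
    true_age: int         # the user's real age (unknown to the platform)
    estimated_age: float  # hypothetical output of an age-estimation tool


def outcome(check: Check, min_age: int = MIN_AGE) -> str:
    """Label one age check as correct, a false positive, or a false negative."""
    allowed_in_fact = check.true_age >= min_age
    flagged_underage = check.estimated_age < min_age
    if allowed_in_fact and flagged_underage:
        return "false_positive"   # eligible user wrongly booted
    if not allowed_in_fact and not flagged_underage:
        return "false_negative"   # underage user slips through
    return "correct"


# Made-up sample: estimates are off by a year or two in either direction.
checks = [
    Check(true_age=14, estimated_age=16.5),
    Check(true_age=15, estimated_age=14.2),
    Check(true_age=16, estimated_age=15.1),
    Check(true_age=17, estimated_age=18.0),
    Check(true_age=13, estimated_age=12.4),
]

counts = {}
for c in checks:
    label = outcome(c)
    counts[label] = counts.get(label, 0) + 1

print(counts)
```

In this toy sample, the 14-year-old estimated at 16.5 slips through and the 16-year-old estimated at 15.1 gets booted. Users closest to the cutoff are the ones a small estimation error misclassifies.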

The privacy problem

Now we get to the really messy part. To verify ages at this scale, platforms will need to collect and store sensitive personal data like government IDs, facial scans, and potentially banking information. In a country that’s seen massive data breaches in telecommunications, healthcare, and retail sectors, this creates a whole new attack surface.

The government says there are “strong protections” requiring data destruction after verification and penalties for misuse. But let’s be real – once this data collection infrastructure exists, the commercial incentives to retain or misuse it will be enormous. And do we really trust social media companies with our most sensitive identification documents?

Will it even work?

Critics point out some glaring holes in this approach. The ban excludes dating sites, online gaming environments, and AI chatbots – all places where harmful interactions occur. Remember those AI systems that had “sensual” conversations with minors? They’re not covered. Meanwhile, teens who rely on social media for community or mental health support could become more isolated.

And let’s talk about enforcement. As former Facebook executive Stephen Scheeler pointed out, “It takes Meta about an hour and 52 minutes to make $50 million in revenue.” For these companies, fines are basically the cost of doing business. Meanwhile, teens are already sharing workarounds on TikTok and planning to use VPNs to bypass restrictions.
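
Scheeler’s figure is easy to sanity-check with some quick arithmetic. The Python sketch below takes the numbers exactly as quoted and adds two simplifying assumptions of its own: revenue arrives at a constant rate, and a year has 365 days. The quote doesn’t specify a currency, so the amounts are treated as plain dollar figures.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # assumption: 365-day year

quoted_amount = 50_000_000   # "$50 million" from the quote
quoted_minutes = 60 + 52     # "an hour and 52 minutes"
max_fine = 49_500_000        # maximum fine cited in the article

# Assumption: revenue accrues at a constant rate around the clock.
revenue_per_minute = quoted_amount / quoted_minutes
implied_annual_revenue = revenue_per_minute * MINUTES_PER_YEAR
minutes_to_cover_fine = max_fine / revenue_per_minute

print(f"Implied revenue per minute: ${revenue_per_minute:,.0f}")
print(f"Implied annual revenue: ${implied_annual_revenue / 1e9:,.1f} billion")
print(f"Minutes of revenue to cover the maximum fine: {minutes_to_cover_fine:.0f}")
```

On those assumptions, the maximum fine works out to a little under two hours of revenue, which is the point Scheeler was making.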

Global implications

Australia’s essentially becoming the world’s test case for whether large-scale age verification is feasible. The UK recently introduced strict rules protecting minors, France is considering bans for under-15s, and Spain requires parental approval for under-16s. In the US, Utah’s attempt to restrict under-18s was blocked on constitutional grounds.

Basically, every government is watching to see if this approach actually reduces harm without creating bigger problems. If Australia makes it work, we’ll likely see copycat legislation worldwide. If it fails? Well, we’ll have a fascinating case study in how not to regulate the internet.