According to Windows Report, Microsoft has signed a $9.7 billion, five-year deal with data center operator IREN that gives the tech giant access to NVIDIA’s GB300 chips. The deal provides Microsoft with access to nearly 3,000 megawatts of total capacity across IREN’s North American data centers, with deployment beginning at the 750-megawatt Childress, Texas campus, where new liquid-cooled facilities are capable of delivering 200 megawatts of critical IT power. Microsoft’s prepayment will help finance IREN’s $5.8 billion deal with Dell. A separate multibillion-dollar agreement with AI cloud startup Lambda for NVIDIA-backed infrastructure was also confirmed. These deals follow Microsoft’s recent $17.4 billion deal with Nebius Group and show how intense the competition for AI infrastructure capacity has become. This strategic shift reveals deeper trends in how tech giants are approaching the AI compute race.

The GB300 Technical Architecture Revolution

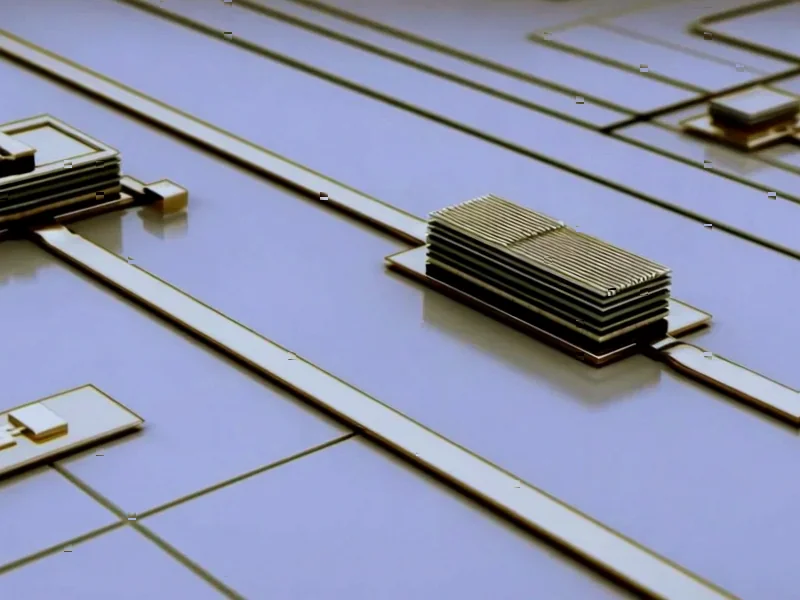

The NVIDIA GB300 represents a fundamental architectural shift that goes beyond mere performance improvements. Unlike previous generations that focused primarily on increasing transistor density and clock speeds, the GB300 integrates multiple Blackwell GPUs with Grace CPUs in a unified architecture that dramatically reduces latency and power consumption. The technical breakthrough here isn’t just raw compute power—it’s the co-design of silicon, networking, and cooling systems that enables unprecedented scale. The liquid-cooled infrastructure IREN is deploying in Texas isn’t optional; it’s essential for managing the thermal density of these advanced chips, which can consume upwards of 2,000 watts per GPU. This represents a 3-4x increase in power density compared to previous generations, fundamentally changing data center design requirements.
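To put the power figures in perspective, the short Python sketch below works out a rough ceiling on how many GPUs a given IT power budget could feed. It uses only the 200-megawatt critical IT power figure reported for the Childress facilities and the 2,000-watt per-GPU figure cited above. The function name `max_gpus` and the `gpu_share` parameter are hypothetical, introduced purely for illustration, and the 75% share is an assumed value, not a number from IREN, Microsoft, or NVIDIA.

```python
# Rough ceiling on GPU count from an IT power budget (illustrative arithmetic only).

CRITICAL_IT_POWER_MW = 200   # critical IT power reported for the Childress liquid-cooled facilities
WATTS_PER_GPU = 2_000        # upper-end per-GPU draw cited in the article


def max_gpus(it_power_mw: float, watts_per_gpu: float, gpu_share: float = 1.0) -> int:
    """Return how many GPUs fit within the IT power budget.

    gpu_share is a hypothetical fraction of IT power that reaches the GPUs;
    the remainder would go to CPUs, memory, networking, and storage.
    """
    if not 0 < gpu_share <= 1:
        raise ValueError("gpu_share must be in (0, 1]")
    usable_watts = it_power_mw * 1_000_000 * gpu_share
    return int(usable_watts // watts_per_gpu)


# Upper bound: every watt of IT power goes to GPUs.
print(max_gpus(CRITICAL_IT_POWER_MW, WATTS_PER_GPU))
# Assumed case: 75% of IT power reaches the GPUs.
print(max_gpus(CRITICAL_IT_POWER_MW, WATTS_PER_GPU, 0.75))
```

Under these assumptions the ceiling is on the order of 100,000 GPUs, and lower once non-GPU components take their share. The real deployment size depends on rack design and on the actual per-GPU draw, neither of which is given here.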

Why Microsoft Is Outsourcing AI Infrastructure

Microsoft’s decision to partner rather than build reflects a strategic calculation about the constraints facing AI infrastructure at scale. Building new data centers isn’t just about capital expenditure—it’s about power availability, regulatory approvals, and construction timelines that can stretch 3-5 years in competitive markets. By leveraging IREN’s existing capacity and power contracts, Microsoft gains immediate access to infrastructure that would otherwise take years to develop. More importantly, this approach diversifies Microsoft’s risk exposure. If AI demand patterns shift or new architectures emerge, Microsoft can adjust its partnership commitments rather than being locked into billions in sunk costs for facilities that might become obsolete. This flexibility is crucial in a market where AI workloads and optimal architectures are still evolving rapidly.

The Coming Capacity Crunch and Power Constraints

These deals add up to nearly $30 billion in recent months: $9.7 billion with IREN, $17.4 billion with Nebius, and a further multibillion-dollar agreement with Lambda. That scale signals that we’re approaching a fundamental capacity constraint in AI infrastructure. The limiting factor isn’t just capital or technical expertise; it’s access to reliable, affordable power at the scale required for AI training and inference. Data centers housing advanced AI chips like the GB300 can require 50-100 megawatts per facility, roughly equivalent to the power used by 40,000-80,000 homes. Finding locations with available grid capacity, favorable power rates, and supportive regulatory environments has become the real bottleneck. Microsoft’s partnership strategy effectively outsources this challenge to specialists like IREN that have already secured power contracts and development rights in strategic locations.
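The homes comparison rests on a simple conversion, sketched below in Python. It shows the average household draw implied by pairing 50-100 megawatts with 40,000-80,000 homes. The helper names `implied_kw_per_home` and `homes_powered` are hypothetical. The calculation treats a home as a constant average load, which is a simplification rather than anything stated in the source.

```python
# Check the "megawatts to homes" comparison (illustrative arithmetic only).

HOURS_PER_YEAR = 8_760  # 365 days * 24 hours


def implied_kw_per_home(facility_mw: float, homes: int) -> float:
    """Average continuous draw per home (kW) implied by a facility/homes pairing."""
    return facility_mw * 1_000 / homes


def homes_powered(facility_mw: float, kw_per_home: float) -> int:
    """Number of homes with the given average draw that a facility's load equals."""
    return round(facility_mw * 1_000 / kw_per_home)


low = implied_kw_per_home(50, 40_000)    # low end of the quoted range
high = implied_kw_per_home(100, 80_000)  # high end of the quoted range
print(low, high)                         # both ends imply the same per-home draw
print(low * HOURS_PER_YEAR)              # implied annual kWh per home
print(homes_powered(100, low))           # recovers the 80,000-home figure
```

Both ends of the range imply the same 1.25 kW average per home, or about 10,950 kWh per year, so the comparison is internally consistent. How closely that matches actual household consumption varies by region and is not addressed in the article.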

The New AI Infrastructure Ecosystem

This deal signals the emergence of a new layer in the cloud computing ecosystem: specialized infrastructure providers that focus exclusively on high-performance AI workloads. Companies like IREN, CoreWeave, and Lambda are carving out a niche by offering infrastructure optimized specifically for AI rather than general-purpose cloud computing. Their value proposition lies in deeper technical expertise with AI workloads, faster deployment of cutting-edge hardware, and more flexible commercial terms than traditional cloud providers. For Microsoft, partnering with these specialists allows it to offer customers access to the latest AI hardware without having to retool its entire global cloud infrastructure. This creates a hybrid approach where Microsoft maintains its general-purpose Azure cloud while leveraging specialized partners for peak AI demand.

What Comes After the GB300 Era

Looking beyond the immediate GB300 deployment, these massive infrastructure commitments raise questions about architectural lock-in and future flexibility. The five-year term of Microsoft’s IREN deal suggests confidence in the Blackwell architecture’s longevity, but AI hardware is evolving at an unprecedented pace. By 2026-2027, we’ll likely see next-generation architectures from NVIDIA, AMD, and custom silicon providers that could offer different performance characteristics. Microsoft’s partnership model provides some insulation against these shifts, but the scale of these commitments suggests the company is betting heavily on the NVIDIA ecosystem for the foreseeable future. The real test will come when these facilities need retrofitting for future generations, and it becomes clear whether the liquid-cooled infrastructure and power delivery systems can adapt to whatever comes after Blackwell.