According to Windows Report, Microsoft AI head Mustafa Suleyman stated at the AfroTech Conference in Houston that AI fundamentally lacks consciousness and cannot experience genuine emotions like pain or sadness. He emphasized that while AI models can create the “perception” or “seeming narrative” of consciousness through advanced pattern recognition, they don’t actually experience inner feelings. Suleyman specifically called the pursuit of emotionally conscious AI “the wrong question” and warned that such efforts distract from AI’s true purpose of improving human life rather than imitating it. His comments come as competitors including OpenAI, Meta, and xAI continue developing emotionally intelligent chatbots and digital companions.

The Stakeholder Divide in AI Development

Suleyman’s position creates a clear philosophical divide that will impact different stakeholders unevenly. For enterprise customers and business users, Microsoft’s stance provides reassurance that AI will remain a predictable, controllable tool rather than an unpredictable “being” with emotional responses. This aligns with corporate risk management priorities where consistency and reliability outweigh emotional engagement. However, consumer-facing applications and mental health tech companies pursuing emotionally responsive AI companions now face increased skepticism from investors and users about whether their products can deliver genuine emotional support versus sophisticated mimicry.

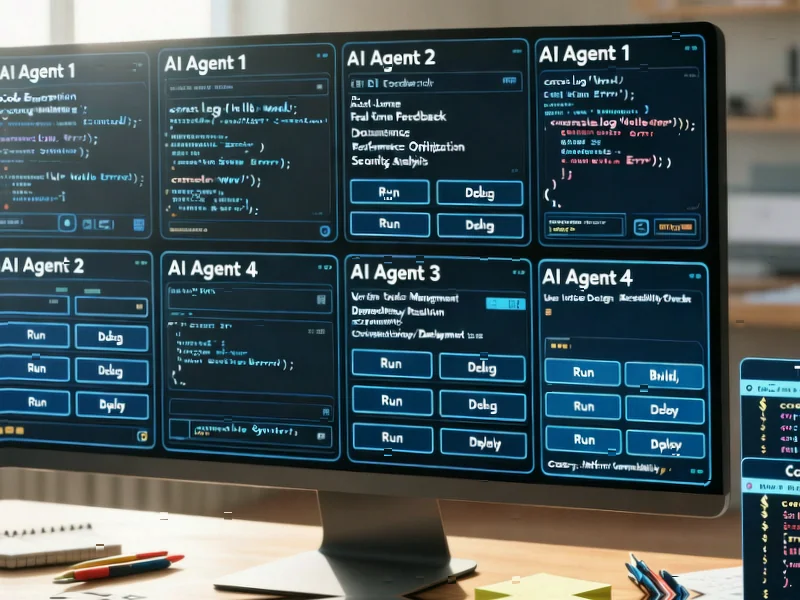

Developer Experience and Ethical Guardrails

The practical implications for developers are substantial. Microsoft’s position suggests the company will continue building transparent AI systems where developers can “see what the model is doing” rather than treating AI as a black box with mysterious internal states. This approach favors explainable AI and audit trails over purely performance-driven development. Developers working on Microsoft’s AI platforms should expect increased emphasis on ethical guardrails that prevent even the appearance of emotional manipulation, which could limit certain types of conversational AI applications that simulate deep emotional connections.

Market Fragmentation and Competitive Pressure

We’re witnessing the early stages of market fragmentation where different companies pursue fundamentally different visions of AI’s role. Microsoft’s tool-focused approach contrasts sharply with companies like OpenAI and Meta that are investing heavily in emotionally responsive systems. This divergence creates a strategic tension: will users prefer AI that knows its place as a tool, or will they gravitate toward systems that provide the illusion of genuine connection? The answer likely varies by use case—productivity tools versus companionship applications—suggesting the market may support multiple approaches rather than converging on a single model.

Regulatory and Legal Implications

Suleyman’s comments have significant regulatory implications. By clearly stating that AI lacks consciousness, Microsoft positions itself favorably in upcoming AI governance debates. This stance simplifies liability questions—if AI cannot feel or experience, then it cannot be held responsible for its actions in the way humans can. This perspective supports the prevailing legal framework where responsibility ultimately rests with developers and deployers rather than the AI systems themselves. However, it also raises questions about whether this definition will hold as AI systems become more sophisticated in simulating emotional intelligence.

The Real Risks Beyond Consciousness

The most valuable insight from Suleyman’s position is the redirection of attention from sci-fi concerns about machine consciousness to more immediate risks. The real dangers aren’t emotional AI overlords but systems that perfectly mimic human interaction while remaining completely alien in their internal processing. Such systems create unprecedented opportunities for manipulation, misinformation, and dependency, none of which requires a machine uprising. As Suleyman suggests, the critical question isn’t whether machines can feel, but whether we’re building systems that enhance human agency rather than undermine it through sophisticated behavioral manipulation.