According to Techmeme, Microsoft CEO Satya Nadella said in recent comments that the company has Nvidia GPUs sitting in racks that cannot be powered on because of insufficient energy availability and data center space. Nadella stated that compute is no longer the primary bottleneck for AI development; the binding constraints are now power infrastructure and physical data center capacity. He also indicated that Microsoft is cautious about over-buying current-generation Nvidia GPUs, given that new, more capable GPUs emerge annually. Taken together, the comments highlight both an immediate energy constraint and the purchasing caution it forces on major AI infrastructure players, and they signal a fundamental shift in what limits the AI industry’s growth.

The Real Infrastructure Crisis

Nadella’s revelation exposes what industry insiders have been quietly discussing for months: the AI boom is hitting physical limits that money alone can’t immediately solve. While venture capital and corporate investment have poured billions into GPU purchases, the supporting infrastructure, particularly power grids and new data center construction, can’t keep pace. This isn’t just a Microsoft problem; it’s an industry-wide constraint that will affect every major player scaling AI operations. The situation resembles earlier technology booms in which hardware advances outpaced the infrastructure needed to deploy them at scale.

Strategic Implications for Cloud Providers

Microsoft’s position reveals two strategic considerations. First, the company is clearly managing GPU inventory with an eye toward rapid hardware obsolescence. As Nadella indicated, each new generation of Nvidia GPUs delivers substantial performance improvements, which makes large purchases of current-generation hardware potentially wasteful. Second, the power constraint creates a natural moat for established cloud providers that already hold data center capacity and power contracts. New entrants will find it increasingly difficult to secure the energy allocations and physical space needed to compete at scale.

Emerging Market Opportunities

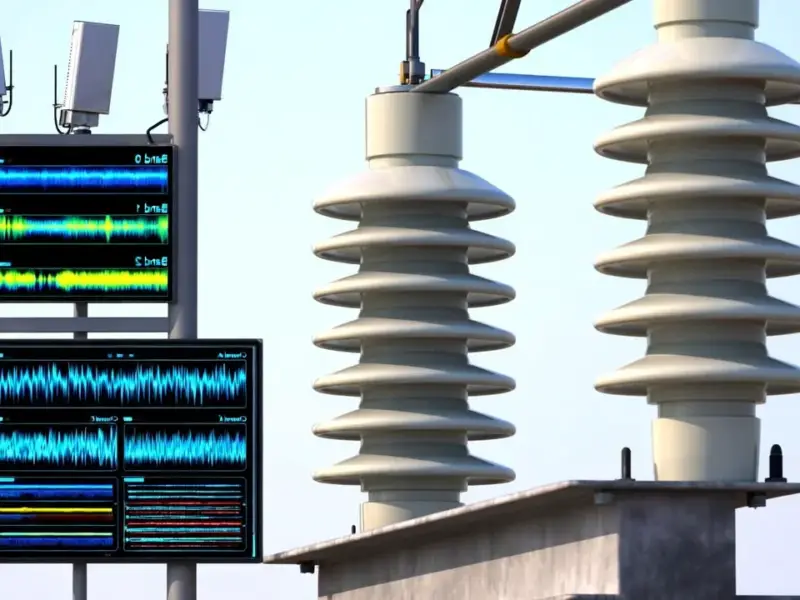

The power bottleneck creates significant opportunities across several sectors. Energy providers, particularly those with reliable baseload capacity, suddenly find themselves with unprecedented leverage. Companies specializing in data center efficiency, liquid cooling, and power management will see rising demand. As industry observers have noted, we’re likely to see more investment in alternative energy sources dedicated to data center operations, including nuclear, geothermal, and advanced battery storage.

The Changing Investment Landscape

This shift from compute-limited to power-limited growth will redirect capital flows. Nvidia and other GPU manufacturers will continue to see strong demand, but because power rather than chips is now the binding constraint, investors may start looking at power infrastructure, data center REITs, and energy technology companies. The market implications are substantial: GPU valuation multiples could compress if the industry can’t deploy the hardware it already owns, while energy and infrastructure plays see renewed interest.

Competitive Dynamics in the AI Race

Microsoft’s cautious approach to GPU purchasing reflects broader strategic thinking about the AI arms race. Rather than simply buying every available GPU, the company appears to be optimizing for total cost of ownership and deployment efficiency. This suggests a more mature phase of AI infrastructure development, in which brute-force compute acquisition gives way to careful capacity planning. As analysts have observed, this could favor companies with existing scale and operational expertise over well-funded but infrastructure-poor startups.

Long-Term Industry Outlook

The power constraint isn’t a temporary problem but a structural feature of the AI landscape. As models grow larger and inference demands increase, energy requirements will only escalate. This reality will force fundamental changes in how AI companies operate, from model architecture decisions to geographic expansion strategies. Companies may increasingly locate data centers near reliable power sources rather than population centers, reversing decades of data center location trends. The industry conversation is rapidly shifting from pure compute capability to sustainable scaling strategies.