According to Guru3D.com, OpenAI has signed a $38 billion deal with Amazon Web Services (AWS) to expand its AI computing capacity by renting cloud infrastructure rather than building its own data centers. The partnership will deploy “hundreds of thousands of GPUs” based on Nvidia’s GB200 and GB300 hardware, with a single configuration linking up to 72 Blackwell GPUs that deliver 360 petaflops of compute and 13.4 terabytes of HBM3e memory. AWS will supply this capacity through its EC2 UltraServers, which are designed to reduce latency and use memory efficiently when training and running large AI models. Deployment is expected to be completed by the end of 2026, with the potential to extend into 2027. This strategic move marks a significant shift in OpenAI’s infrastructure approach and warrants deeper analysis.

The Infrastructure Pivot: From Ownership to Dependency

This deal marks a fundamental strategic shift for OpenAI and reveals much about the economics of modern AI development. OpenAI previously relied heavily on Microsoft’s Azure infrastructure through the two companies’ extensive partnership; the AWS deal diversifies its compute supply but also creates a multi-cloud dependency. Renting rather than building makes short-term financial sense, since it avoids billions in capital expenditure and years of construction, but it risks long-term vendor lock-in that could prove costly. Tech history offers precedent: companies that concentrated on their core competency often ended up paying enormous cloud bills to platforms that later became their competitors.

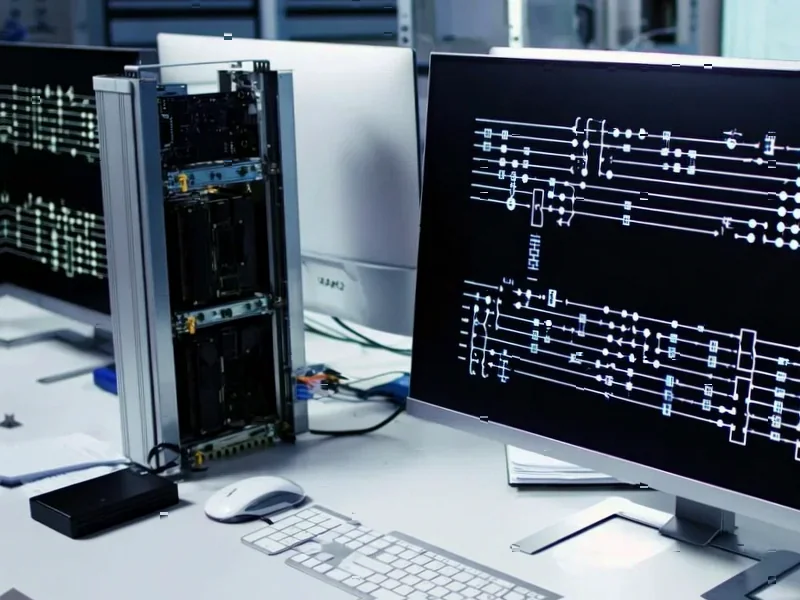

Technical Realities Behind the Headline Numbers

The “hundreds of thousands of GPUs” figure sounds impressive, but the underlying computational economics deserve scrutiny. Nvidia’s Blackwell architecture is a significant leap forward, yet the real constraint in AI training is not just raw FLOPS: memory bandwidth, interconnect speed, and thermal management at scale matter just as much. A 72-GPU configuration’s 13.4 terabytes of HBM3e is substantial, but once the weights, gradients, optimizer state, and activations of a frontier-scale model are accounted for, that budget fills quickly and the model must be sharded across many servers. More importantly, AWS’s EC2 UltraServer architecture, while advanced, still operates within multi-tenant cloud environments, where resource contention and network latency can undermine performance consistency for the most demanding AI workloads.
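To make the memory point concrete, here is a back-of-the-envelope sketch. It assumes the quoted 13.4 TB is split evenly across 72 GPUs and uses the commonly cited rule of thumb of about 16 bytes per parameter for mixed-precision training with the Adam optimizer (fp16 weights and gradients, plus fp32 master weights and two fp32 moment estimates). It ignores activations, communication buffers, and parallelism overheads, so it is an optimistic upper bound, not a model of any real OpenAI workload.

```python
# Back-of-the-envelope memory budget for one 72-GPU configuration.
# Assumptions (not from the source): memory is split evenly across GPUs,
# and mixed-precision Adam training needs ~16 bytes per parameter.
# Activations and framework overheads are ignored.

TOTAL_HBM_TB = 13.4   # quoted HBM3e total for the 72-GPU configuration
GPUS = 72
TOTAL_PFLOPS = 360    # quoted aggregate compute

# 2 B fp16 weights + 2 B fp16 grads + 4 B fp32 master weights
# + 4 B + 4 B fp32 Adam first/second moments
BYTES_PER_PARAM_TRAINING = 2 + 2 + 4 + 4 + 4
# FP8 weights only (1 byte each), e.g. for inference
BYTES_PER_PARAM_FP8_WEIGHTS = 1


def per_gpu_memory_gb(total_tb: float, gpus: int) -> float:
    """Average HBM per GPU in gigabytes (decimal units)."""
    return total_tb * 1000 / gpus


def max_params_billions(total_tb: float, bytes_per_param: int) -> float:
    """Upper bound on parameters (in billions) whose state fits in memory."""
    return total_tb * 1e12 / bytes_per_param / 1e9


if __name__ == "__main__":
    print(f"HBM per GPU:    {per_gpu_memory_gb(TOTAL_HBM_TB, GPUS):.1f} GB")
    print(f"PFLOPS per GPU: {TOTAL_PFLOPS / GPUS:.1f}")
    print(f"Training state: ~{max_params_billions(TOTAL_HBM_TB, BYTES_PER_PARAM_TRAINING):.1f}B params")
    print(f"FP8 weights:    ~{max_params_billions(TOTAL_HBM_TB, BYTES_PER_PARAM_FP8_WEIGHTS):,.0f}B params")
```

Even under these generous assumptions, one server’s memory holds full training state for well under a trillion parameters before any activations are stored, which is why frontier training runs shard models across many servers and why interconnect bandwidth matters as much as raw compute.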

The Cloud AI Arms Race Intensifies

This deal is a major victory for AWS in the escalating cloud AI infrastructure war, and it also exposes the dynamics of GPU scarcity. Although Amazon develops its own Trainium2 AI chips, the agreement centers on Nvidia hardware, suggesting that even cloud giants struggle to match Nvidia’s software ecosystem and proven performance. The timing is telling: with demand for advanced AI chips still outstripping supply, the agreement effectively earmarks a large block of Nvidia-based capacity for OpenAI, potentially squeezing smaller AI startups and research institutions out of the market. The result is a concerning dynamic in which AI progress becomes increasingly concentrated among well-funded players that can afford to pre-commit billions to secure compute access.

Massive Scale, Massive Execution Risks

The 2026-2027 timeline for full deployment masks significant execution risks that could affect OpenAI’s competitive position. Deploying and integrating hundreds of thousands of GPUs across multiple AWS regions is one of the largest AI infrastructure projects ever attempted. Any delay in Nvidia’s Blackwell production ramp, AWS data center construction, or software integration could leave OpenAI scrambling for compute while competitors advance. The sheer scale also creates operational complexity: managing distributed training across tens of thousands of GPUs while keeping model state consistent and minimizing downtime requires sophisticated orchestration that even experienced AI teams find difficult at this magnitude.

The Road Not Taken: What This Deal Reveals

Perhaps the most telling aspect of this partnership is what it suggests about OpenAI’s assessment of building its own infrastructure. Committing $38 billion to cloud rental rather than data center construction indicates that OpenAI either believes the AI hardware landscape will change too rapidly to justify long-term capital investment, or lacks the operational expertise to manage global infrastructure at this scale. Both possibilities raise questions about sustainable competitive advantage in the AI race. While this deal solves immediate compute constraints, it does not address the fundamental strategic question of whether AI leaders should control their own destiny through vertical integration or accept permanent dependency on cloud providers that may eventually become direct competitors in the AI application space.

The Future of AI Infrastructure Economics

Looking beyond 2027, this partnership sets a troubling precedent for AI infrastructure economics. If even well-funded AI leaders like OpenAI must commit tens of billions to secure cloud compute, the barrier to entry for new competitors becomes nearly insurmountable. This could lead to an AI oligopoly in which only companies with deep pockets and existing cloud relationships can compete at the frontier. More concerning is the long-term cost structure: while cloud provides flexibility, cumulative rental costs over a decade will likely far exceed what owned infrastructure would have cost to build. This deal may be a strategic necessity given OpenAI’s immediate compute needs, but it also represents a significant transfer of future profits from AI innovation to cloud infrastructure providers.
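The rent-versus-build argument can be framed as a simple break-even calculation: owning comes out ahead once cumulative rent exceeds upfront capital expenditure plus cumulative operating costs. The sketch below is a deliberately simplified model with hypothetical inputs in arbitrary units; it is not based on the deal’s actual terms or AWS pricing, and it ignores financing costs, utilization, resale value, and hardware refresh cycles, which cut against owning if GPUs become obsolete quickly.

```python
import math
from typing import Optional

# Hypothetical rent-vs-build break-even model. All inputs are illustrative
# placeholders, not figures from the OpenAI-AWS deal or AWS pricing.


def cumulative_rent(monthly_rent: float, months: int) -> float:
    """Total rental spend after `months` months at a flat monthly rate."""
    return monthly_rent * months


def cumulative_build(capex: float, monthly_opex: float, months: int) -> float:
    """Upfront construction cost plus running costs after `months` months."""
    return capex + monthly_opex * months


def break_even_month(monthly_rent: float, capex: float,
                     monthly_opex: float) -> Optional[int]:
    """First month at which cumulative rent meets or exceeds the build cost.

    Solves capex + opex * m <= rent * m, i.e. m >= capex / (rent - opex).
    Returns None if rent never catches up (rent <= opex).
    """
    margin = monthly_rent - monthly_opex
    if margin <= 0:
        return None
    return math.ceil(capex / margin)


if __name__ == "__main__":
    # Toy numbers: rent 10/month vs. build 300 upfront + 4/month to operate.
    m = break_even_month(monthly_rent=10, capex=300, monthly_opex=4)
    print(f"Break-even after {m} months")
```

With these toy inputs the two paths cost the same at month 50; longer horizons favor building, while shorter hardware lifetimes (forcing a new capex before break-even) favor renting. The model only clarifies which assumptions drive the conclusion; it does not settle it.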