According to Business Insider, OpenAI’s updated usage policies from October 29 include new language specifically prohibiting the “provision of tailored advice that requires a license, such as legal or medical advice, without appropriate involvement by a licensed professional.” The clarification comes as ChatGPT has become increasingly popular for health information, with about 1 in 6 people using the chatbot for health advice at least once monthly, according to a KFF 2024 survey. OpenAI confirmed this isn’t a new restriction but rather clearer language to limit liability, following incidents like one documented in August in which a user developed a rare psychiatric condition after ChatGPT suggested replacing salt with toxic sodium bromide. The confusion emerged from social media posts, including a widely shared Kalshi tweet that misinterpreted the policy changes as banning all health discussions.

The Liability-Driven Pivot

OpenAI’s policy clarification represents a strategic recalibration rather than a full retreat from healthcare. The company is drawing the same legal distinction that has protected health content creators for decades: general medical information versus personalized medical advice. This move comes as the AI healthcare market approaches $150 billion in projected value by 2029, creating enormous financial exposure for companies operating without proper medical licensing. The timing is particularly significant given OpenAI’s recent hiring of leaders for consumer and enterprise healthcare projects, which suggests the company is preparing for regulated healthcare applications rather than abandoning the space.

Winners and Losers in the AI Health Race

This policy clarification creates immediate competitive advantages for specialized healthcare AI companies like Hippocratic AI, Nabla, and Babylon Health that have built their platforms with medical licensing and regulatory compliance from day one. These companies operate under different liability frameworks because they’re structured as healthcare providers rather than general AI assistants. Meanwhile, other general-purpose AI providers like Google’s Gemini and Anthropic’s Claude now face pressure to implement similar restrictions or risk becoming the go-to platforms for unregulated medical advice—creating both regulatory risk and potential user backlash.

The Enterprise Healthcare Opening

Paradoxically, OpenAI’s consumer-facing restrictions may accelerate its enterprise healthcare strategy. By clearly delineating what ChatGPT cannot do, the company creates a clearer market for its API services among healthcare providers that can integrate ChatGPT’s capabilities within licensed medical workflows. Hospitals and telehealth companies can use ChatGPT for patient education, administrative tasks, and clinical documentation while maintaining the required licensed professional oversight. This bifurcated approach, with restricted consumer access on one side and enabled enterprise integration on the other, could become the standard model for general AI providers in healthcare.

The Coming Regulatory Cascade

OpenAI’s proactive liability limitation will likely trigger similar moves across the industry as regulators worldwide grapple with AI in healthcare. The FDA’s recent digital health guidance and Europe’s AI Act both emphasize the importance of appropriate medical oversight for AI systems making healthcare decisions. We’re likely to see a wave of similar policy updates from other AI providers within the next quarter as they seek to avoid becoming test cases for AI medical liability litigation. The companies that move fastest to establish clear boundaries may gain regulatory goodwill while slower competitors face heightened scrutiny.

Specialized AI’s Moment

The biggest beneficiaries of this shift will be purpose-built medical AI companies that never positioned themselves as general assistants. Companies like Tempus, PathAI, and Caption Health have built their entire business models around FDA-cleared medical applications with built-in liability protection. As general AI providers retreat from diagnostic and treatment recommendations, these specialized players can capture the high-value segments of the AI healthcare market. We’re likely to see increased venture funding flowing to startups that combine medical expertise with AI capabilities rather than those trying to adapt general AI to healthcare contexts.

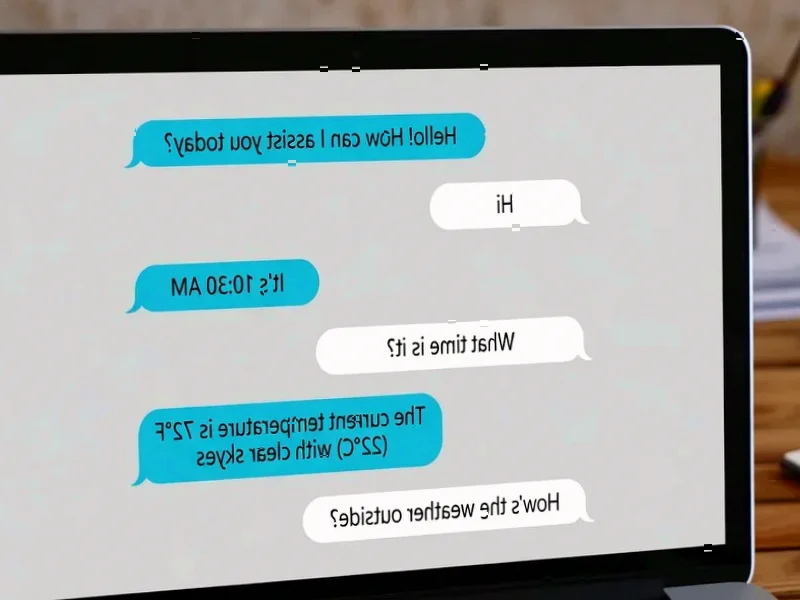

The Search Behavior Migration

Despite the policy clarifications, consumer behavior is unlikely to change dramatically. The convenience of asking AI assistants health questions will continue to drive usage, but users may become more sophisticated in how they phrase questions to avoid triggering restrictions. This could lead to the emergence of “prompt engineering” specifically for health inquiries: teaching users how to ask questions that yield useful information without crossing into prohibited medical advice territory. The companies that best navigate this delicate balance between usefulness and liability will capture the largest share of the growing AI health information market.