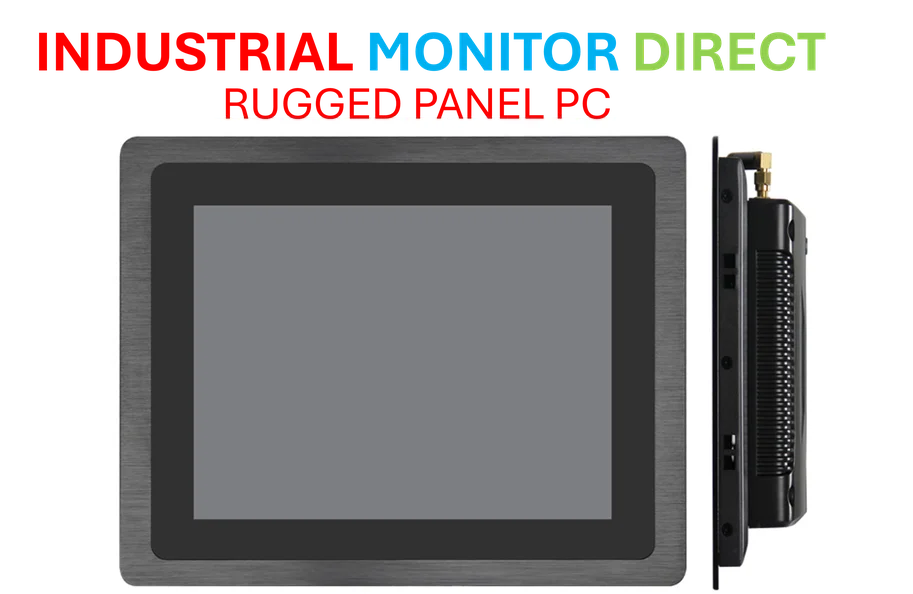

According to Guru3D.com, SAPPHIRE Technology has officially launched its EDGE AI Series mini PCs powered by AMD’s latest Ryzen AI 300 Series processors, following an initial preview earlier this year. The ultra-compact systems measure just 117 × 111 × 30 mm and feature AMD’s XDNA2 NPU architecture, rated for up to 50 trillion operations per second (TOPS) of on-device AI processing. Three models are available: EDGE AI 370 with Ryzen AI 9 HX 370, EDGE AI 350 with Ryzen AI 7 350, and EDGE AI 340 with Ryzen AI 5 340. All ship as barebone kits without RAM, storage, or an operating system. The systems support up to 96GB of DDR5 memory, multiple M.2 slots, and 2.5Gb Ethernet, and are Copilot+ PC ready for Microsoft’s upcoming AI features in Windows 11. The announcement marks a significant step in bringing enterprise-grade AI acceleration to compact form factors.

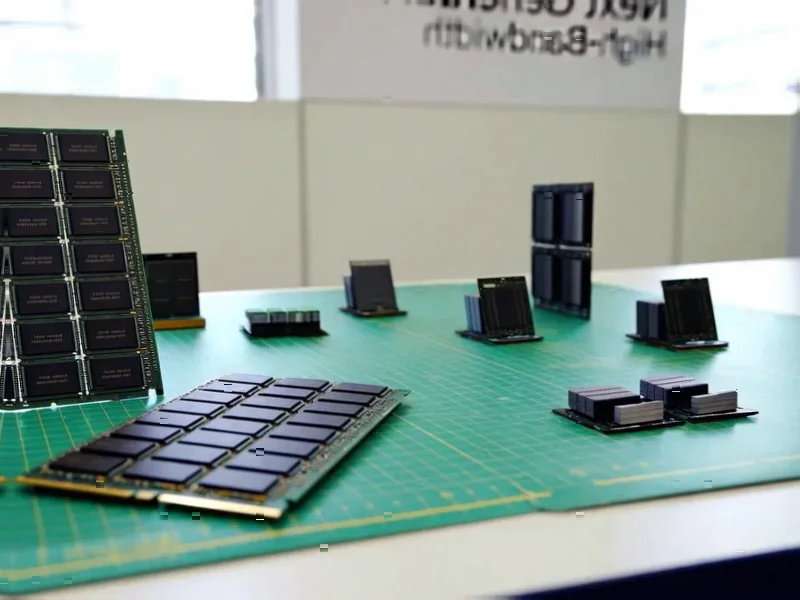

The NPU Architecture Behind 50 TOPS Performance

The real story here is AMD’s XDNA2 architecture, which represents a substantial leap from previous-generation NPUs. Unlike general-purpose CPU or GPU processing, dedicated NPUs like XDNA2 are designed specifically for the matrix multiplication and convolution operations that dominate neural network inference. The 50 TOPS figure is a peak rating in trillions of operations per second; NPU ratings like this are typically quoted for low-precision integer arithmetic, so sustained throughput on a given model depends on how well that model maps onto the hardware. In practice, it means these mini PCs can handle real-time object detection, natural language processing, and media enhancement locally, without the latency and privacy concerns of cloud-based processing. What makes this particularly notable is that the performance comes from a mobile-class processor in a 30 mm-tall chassis, which addresses one of the major challenges in edge AI deployment: fitting useful acceleration into a small power and thermal budget.
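To put the 50 TOPS rating in perspective, the following back-of-the-envelope sketch converts a peak rating into a lower bound on per-inference latency. The model size (10 billion operations per inference) and the 30% utilization figure are illustrative assumptions, not measurements of any EDGE AI system; real latency also depends on memory bandwidth, data movement, and operator support.

```python
def min_latency_ms(ops_per_inference: float,
                   peak_tops: float = 50.0,
                   utilization: float = 1.0) -> float:
    """Lower-bound latency in milliseconds for one inference.

    ops_per_inference: total operations the model needs (hypothetical input).
    peak_tops: peak NPU rating in trillions of operations per second.
    utilization: assumed fraction of peak actually sustained (0 < u <= 1).
    """
    if not 0.0 < utilization <= 1.0:
        raise ValueError("utilization must be in (0, 1]")
    effective_ops_per_second = peak_tops * 1e12 * utilization
    return ops_per_inference / effective_ops_per_second * 1e3


# Hypothetical vision model needing 10 billion operations per frame.
best_case = min_latency_ms(10e9)                   # at full peak throughput
derated = min_latency_ms(10e9, utilization=0.3)    # assumed 30% utilization
print(f"best case: {best_case:.3f} ms, derated: {derated:.3f} ms")
```

Even with a heavy derating, the compute bound for a model of this size stays under a millisecond, which is why the practical bottlenecks on such hardware tend to be elsewhere.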

Transforming Edge Computing Economics

Sapphire’s move signals a fundamental shift in how enterprises approach edge AI deployment. Traditionally, implementing AI at the edge meant either compromising on performance with lower-power chips or dealing with bulky, expensive systems that defeated the purpose of edge computing. The EDGE AI Series changes this equation by packing an NPU rated at up to 50 TOPS into a 117 × 111 × 30 mm chassis, far smaller than a traditional desktop PC. For industries like retail analytics, manufacturing quality control, and healthcare monitoring, this means sophisticated AI models can run directly where the data is generated, without the infrastructure costs and latency of cloud connectivity. The barebone approach also gives IT departments the flexibility to configure systems for their workloads, whether that means maximizing RAM for complex models or prioritizing storage for data logging.
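As a rough illustration of the RAM sizing decision, the sketch below estimates how much memory a model's weights occupy at different precisions and checks that against the 96GB maximum. The parameter counts, the decimal definition of a gigabyte, and the 20% headroom reserved for the operating system and runtime buffers are assumptions chosen for illustration; actual requirements vary with the runtime and workload.

```python
MAX_MEMORY_GB = 96.0  # maximum DDR5 capacity quoted for the EDGE AI Series


def weights_gb(num_parameters: float, bits_per_parameter: int) -> float:
    """Approximate size of model weights in gigabytes (1 GB = 1e9 bytes)."""
    return num_parameters * bits_per_parameter / 8 / 1e9


def fits_in_memory(num_parameters: float,
                   bits_per_parameter: int,
                   installed_gb: float = MAX_MEMORY_GB,
                   headroom: float = 0.2) -> bool:
    """True if the weights fit after reserving an assumed headroom fraction."""
    usable_gb = installed_gb * (1.0 - headroom)
    return weights_gb(num_parameters, bits_per_parameter) <= usable_gb


# Hypothetical model sizes, checked at 16-bit, 8-bit, and 4-bit precision.
for params in (8e9, 70e9):
    for bits in (16, 8, 4):
        size = weights_gb(params, bits)
        verdict = "fits" if fits_in_memory(params, bits) else "does not fit"
        print(f"{params / 1e9:.0f}B params @ {bits}-bit: {size:.1f} GB, {verdict}")
```

Under these assumptions, lower-precision weights are what make larger models practical on a single box, which is one reason memory configuration is worth planning before ordering a barebone kit.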

The Hidden Technical Hurdles

While the specifications are impressive, real-world deployment of these systems will face several challenges that aren’t immediately apparent. Thermal management in such a compact chassis with high-performance components is a significant engineering challenge, particularly in industrial environments where ambient temperatures can vary dramatically. The 50 TOPS figure also assumes well-optimized software: many existing AI frameworks and models weren’t designed with NPU acceleration in mind, so reaching that level of performance may require complex porting and tuning. Additionally, the barebone approach means enterprises need the technical expertise to configure memory, storage, and operating systems for AI workloads, which often have different requirements than traditional computing tasks. These systems will also need robust driver support and ongoing firmware updates to maintain performance as AI frameworks evolve.

Where Edge AI Hardware is Headed

Looking forward, Sapphire’s EDGE AI Series represents just the beginning of a broader trend toward specialized AI hardware at the edge. We’re likely to see increasing differentiation between NPU architectures as vendors optimize for specific use cases – some focusing on computer vision, others on natural language processing, and still others on predictive maintenance algorithms. The Copilot+ PC readiness also hints at the coming integration between hardware and software ecosystems, where AI capabilities become a fundamental part of the operating system rather than separate applications. As more developers gain access to these powerful edge AI platforms, we should expect to see entirely new categories of applications that simply weren’t feasible when AI processing required cloud connectivity or bulky local hardware.