According to Financial Times News, Nvidia CEO Jensen Huang believes China will win the AI race despite US chip restrictions, with about 20% of Nvidia’s data center revenue historically coming from China. A GPT-4 model consumes up to 463,269 megawatt-hours annually, more than 35,000 US homes use in a year, while global data center electricity use is projected to more than double by 2030. China added a record 356GW of renewable capacity last year, including 277GW from solar and 80GW from wind, far exceeding what the US added. Meanwhile, US wholesale electricity prices near data centers have surged by as much as 267% in five years, while renewable investment has declined amid policy uncertainty and the end of subsidies.

The real bottleneck isn’t what you think

Here’s the thing that changes everything: we’re moving from a world where AI progress was limited by chip performance to one where it’s constrained by electricity. That’s a fundamental shift that reshapes the entire competitive landscape. The numbers are staggering: a single massive model can consume as much electricity in a year as tens of thousands of homes. And when you look at who’s building out energy infrastructure versus who’s fighting over the last percentage points of chip performance, the direction becomes pretty clear.
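
To put the 463,269 MWh figure in perspective, here is a rough back-of-the-envelope conversion in Python. The per-home value of 10.5 MWh per year is an assumption on my part (roughly the commonly cited US average), not a number from the article, and the function names are purely illustrative.

```python
HOURS_PER_YEAR = 8760  # non-leap year

# Upper-bound annual consumption quoted in the article, in megawatt-hours.
GPT4_ANNUAL_MWH = 463_269


def average_power_mw(annual_mwh: float) -> float:
    """Continuous power draw (MW) that adds up to annual_mwh over one year."""
    return annual_mwh / HOURS_PER_YEAR


def equivalent_homes(annual_mwh: float, mwh_per_home: float = 10.5) -> float:
    """Number of homes using the same energy per year.

    mwh_per_home is an ASSUMED average household consumption, not a figure
    taken from the article.
    """
    return annual_mwh / mwh_per_home


if __name__ == "__main__":
    print(f"Average draw: {average_power_mw(GPT4_ANNUAL_MWH):.1f} MW")
    print(f"Equivalent homes: {equivalent_homes(GPT4_ANNUAL_MWH):,.0f}")
```

Under that assumption the figure works out to a continuous draw of about 52.9 MW and roughly 44,000 homes, which is consistent with the "more than 35,000 US homes" comparison.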

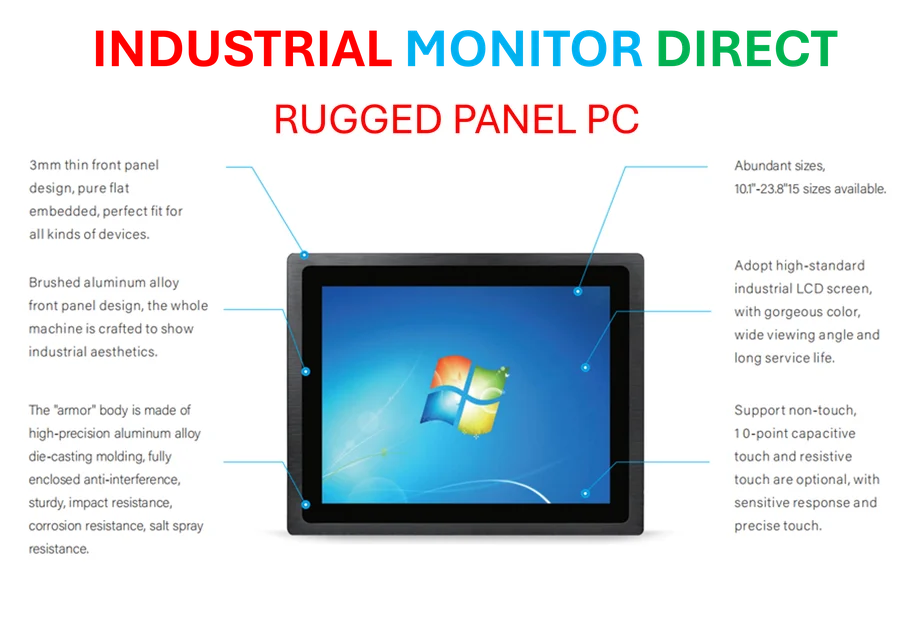

Think about it this way: if you’re trying to train increasingly massive AI models, does it matter more that you have slightly faster chips or that you have access to massively cheaper, abundant electricity? When electricity costs determine how many computations you can afford, the economics start favoring energy-rich nations over chip-rich ones.

China’s renewable energy surge

China isn’t just talking about renewable energy. It’s building it at a scale that’s hard to comprehend. 277 gigawatts of new solar capacity in a single year? That’s more than the entire generating capacity of most countries. These projects are being strategically located in places like Inner Mongolia and connected to coastal demand centers with high-voltage transmission lines. It’s industrial policy meets energy policy in a way that the US hasn’t really attempted.

But here’s what really matters for AI development: Chinese companies like Alibaba, Tencent, and ByteDance are getting preferential electricity rates that effectively subsidize their AI training costs. Even if their domestic chips from Huawei are less efficient than Nvidia’s latest offerings, cheaper power means they can run more computations for the same budget. It’s a classic trade-off where quantity starts to overcome quality differences.
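
The trade-off can be made concrete with a small sketch. Everything here is hypothetical: the electricity prices, the efficiency values, and the function name are illustrative assumptions, not figures from the article, and the model counts only electricity cost (no hardware, cooling, or staffing).

```python
JOULES_PER_KWH = 3.6e6  # 1 kWh = 3.6 million joules


def ops_per_budget(budget_usd: float,
                   price_usd_per_kwh: float,
                   ops_per_joule: float) -> float:
    """Operations an electricity budget can pay for.

    Only the power bill is modeled; chip purchase cost is ignored.
    """
    kwh = budget_usd / price_usd_per_kwh
    return kwh * JOULES_PER_KWH * ops_per_joule


if __name__ == "__main__":
    budget = 1000.0  # hypothetical electricity budget in USD

    # Hypothetical scenario A: efficient chip, expensive power.
    ops_a = ops_per_budget(budget, price_usd_per_kwh=0.10, ops_per_joule=1.0)

    # Hypothetical scenario B: chip half as efficient, power at 40% of the price.
    ops_b = ops_per_budget(budget, price_usd_per_kwh=0.04, ops_per_joule=0.5)

    print(f"B performs {ops_b / ops_a:.2f}x the operations of A")
```

In this toy setup the less efficient chip still comes out ahead, because the electricity price falls by more than the efficiency does. The break-even point is where the price ratio equals the efficiency ratio.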

Meanwhile, back in the US

The US situation is almost the mirror opposite. Electricity costs near data centers have skyrocketed—up 267% in some areas—just as AI’s power demands are exploding. And instead of accelerating renewable investment, we’re seeing uncertainty and declining projects in wind and solar. The executive order ending subsidies certainly doesn’t help.

So we have this weird situation where the US controls the most advanced chips but may struggle to power them affordably, while China has less sophisticated chips but access to far more abundant, cheaper energy. Which matters more in the long run? If energy demands keep scaling faster than transistor improvements, we might be at an inflection point.

This is actually an old story

Look at history—every technological superpower rose on the back of cheap energy. Britain had cheap coal. The US had oil and hydroelectric power. Now we’re seeing the same pattern play out with AI. The country that can provide the cheapest, most abundant electricity to power these energy-hungry models will have a fundamental advantage.

Does this mean China automatically wins the AI race? Not necessarily. But it does mean we’ve been focusing on the wrong bottleneck. Chips matter, but power matters more when you’re talking about models that consume electricity at the scale of small cities. The real competition might not be in Silicon Valley labs but in who can build the most resilient, affordable energy infrastructure to keep the AI models running 24/7.
