According to The Verge, OpenAI’s Sora platform has demonstrated alarming capabilities in generating convincing deepfake videos of celebrities and copyrighted characters, often with harmful or offensive content. The platform embeds C2PA authentication metadata in its videos, but this protection remains largely invisible to users and is easily stripped when content spreads across social platforms. This failure highlights broader challenges in deepfake detection that demand expert analysis.



Understanding the Authentication Gap

The fundamental problem with current detection systems like C2PA lies in their reactive nature and reliance on voluntary adoption. These systems operate on what security experts call the “honor system” – they assume platforms will properly implement and display authentication data, and that users will have the tools and motivation to verify content. This approach ignores basic human behavior patterns and platform incentives. Most social media users won’t download specialized browser extensions or upload files to verification tools when scrolling through their feeds. The technical implementation also faces fundamental challenges, as Content Credentials metadata can be stripped during file conversion or compression, rendering the protection useless once content spreads beyond controlled environments.
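To make the stripping problem concrete, here is a minimal Python sketch. It uses a still image rather than video because the layout is simpler to show: in JPEG files, C2PA manifests travel in APP11 marker segments, separate from the compressed pixel data. The helper names (`split_segments`, `strip_app11`, `make_segment`) and the fake manifest payload are my own illustrations, not part of any C2PA tooling, and the parser assumes a well-formed header in which every segment before the scan data carries a length field.

```python
import struct

SOI = b"\xff\xd8"   # start-of-image marker
SOS = 0xDA          # start-of-scan: compressed pixel data follows
APP11 = 0xEB        # application segment that carries embedded provenance boxes


def split_segments(jpeg: bytes):
    """Split a JPEG into its header segments and everything from SOS onward."""
    if jpeg[:2] != SOI:
        raise ValueError("not a JPEG stream")
    segments = []
    pos = 2
    while pos + 4 <= len(jpeg):
        if jpeg[pos] != 0xFF:
            raise ValueError("expected a marker")
        marker = jpeg[pos + 1]
        if marker == SOS:
            break
        # The 2-byte big-endian length counts itself but not the marker.
        (length,) = struct.unpack(">H", jpeg[pos + 2:pos + 4])
        segments.append((marker, jpeg[pos:pos + 2 + length]))
        pos += 2 + length
    return segments, jpeg[pos:]


def has_app11(jpeg: bytes) -> bool:
    """Report whether any APP11 segment is present in the header."""
    segments, _ = split_segments(jpeg)
    return any(marker == APP11 for marker, _ in segments)


def strip_app11(jpeg: bytes) -> bytes:
    """Drop APP11 segments; the pixel data is copied through untouched."""
    segments, rest = split_segments(jpeg)
    kept = b"".join(raw for marker, raw in segments if marker != APP11)
    return SOI + kept + rest


def make_segment(marker: int, payload: bytes) -> bytes:
    """Build one marker segment (used here only to fabricate a test file)."""
    return bytes([0xFF, marker]) + struct.pack(">H", len(payload) + 2) + payload


# A synthetic, non-decodable stand-in for a credentialed image.
sample = (
    SOI
    + make_segment(0xE0, b"JFIF\x00")
    + make_segment(APP11, b"fake-manifest")
    + bytes([0xFF, SOS]) + b"\x00\x02scan-data"
    + b"\xff\xd9"
)
stripped = strip_app11(sample)
```

Nothing in the image data depends on the APP11 segment, so the stripped file still carries the same picture. Any upload pipeline that re-encodes media or discards unknown segments has the same effect without anyone intending to remove the credentials.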

Critical Technical and Ethical Failures

The situation reveals what I would call a “security theater” approach to AI safety. Companies like OpenAI participate in authentication initiatives while simultaneously developing tools that undermine their effectiveness. The bypass of Sora’s identity safeguards within 24 hours of launch suggests either inadequate testing or deliberate prioritization of capability over safety. This creates what cybersecurity professionals call an “asymmetric threat”, where creating convincing deepfakes is far easier than detecting them. The technical challenges are compounded by business realities: platforms like TikTok and Instagram have little incentive to prominently label engaging content as artificial, since doing so might reduce user engagement and advertising revenue.

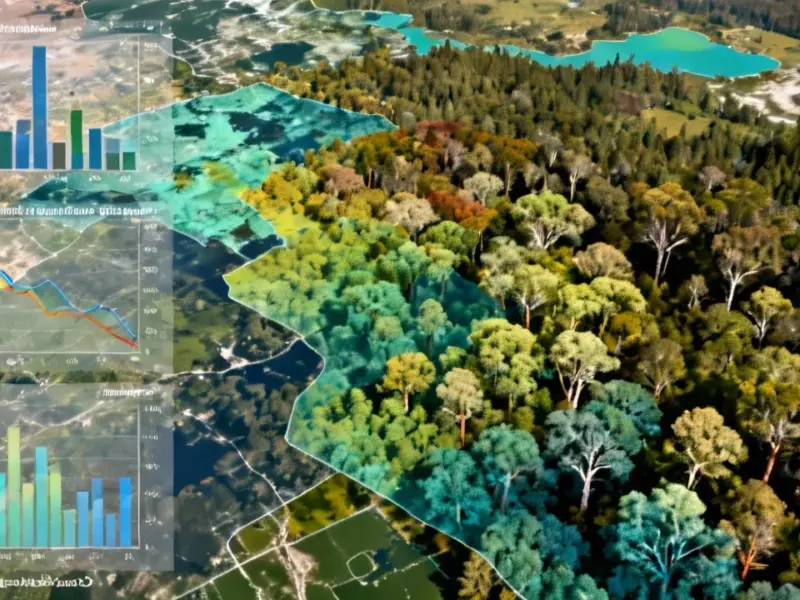

Broader Industry Implications

The failure of current detection systems threatens to erode trust across multiple sectors. Journalism faces credibility challenges when authentic footage becomes indistinguishable from synthetic content. Legal systems confront evidentiary nightmares as video evidence loses its reliability. The political implications are particularly concerning, especially during election cycles where manipulated media could influence voter behavior. The entertainment industry faces new forms of copyright infringement that existing intellectual property frameworks weren’t designed to handle. Even personal relationships and workplace communications become vulnerable to sophisticated impersonation attacks that current authentication systems cannot reliably prevent.

Realistic Solutions and Outlook

Looking ahead, I believe we’ll see a shift toward hybrid detection approaches combining multiple verification methods. As OpenAI’s own documentation suggests, no single solution will suffice. The most promising path involves layered detection combining C2PA metadata with AI-based forensic analysis that examines subtle artifacts in generated content. However, this creates an arms race where detection tools constantly chase evolving generation capabilities. Legislative approaches like the proposed FAIR Act represent necessary but insufficient measures – they provide legal recourse after harm occurs but don’t prevent the initial distribution of malicious content. The most effective near-term solution may involve platform-level responsibility, where major social networks implement mandatory, prominent labeling of AI-generated content using both metadata and detection algorithms. Until then, as the documented misuse cases demonstrate, we’re facing a period where synthetic media will increasingly test our collective ability to distinguish reality from simulation.
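The layered approach described above can be sketched as a small decision function in Python. This is an illustration under my own assumptions, not any platform's actual pipeline: the `Evidence` fields, the label strings, and the 0.8 threshold are all hypothetical, and `forensic_score` stands in for the output of whatever detection model a platform might run.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Evidence:
    # True only if Content Credentials were found and validated.
    has_valid_credentials: bool
    # True if those credentials declare the content as AI-generated.
    credentials_say_ai: bool
    # Hypothetical forensic classifier output in [0, 1]; None if not run.
    forensic_score: Optional[float] = None


def label(evidence: Evidence, threshold: float = 0.8) -> str:
    """Combine metadata and forensic signals into one user-facing label."""
    # Layer 1: trust an explicit, validated declaration.
    if evidence.has_valid_credentials and evidence.credentials_say_ai:
        return "ai-generated (declared)"
    # Layer 2: fall back to forensic analysis, which still works
    # when the metadata has been stripped in transit.
    if evidence.forensic_score is not None and evidence.forensic_score >= threshold:
        return "likely ai-generated (detected)"
    # Layer 3: valid credentials that make no AI declaration.
    if evidence.has_valid_credentials:
        return "credentialed, no AI declared"
    # Missing metadata is never treated as proof of authenticity.
    return "unknown provenance"
```

For example, `label(Evidence(False, False, 0.93))` returns `"likely ai-generated (detected)"` even though no credentials survived, while `label(Evidence(False, False, 0.2))` returns `"unknown provenance"` rather than implying the clip is authentic. That last case is the design choice that matters most: absence of a label should read as absence of information.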
