Machine vision is 90% software and 10% hardware, assuming the correct hardware is selected. That 10% of hardware is largely divided between the CMOS sensor and the computational unit. In industrial automation, the computational unit is commonly referred to as the controller: it runs the operating system and performs the machine vision computation (such as edge-detection kernels or CNNs).

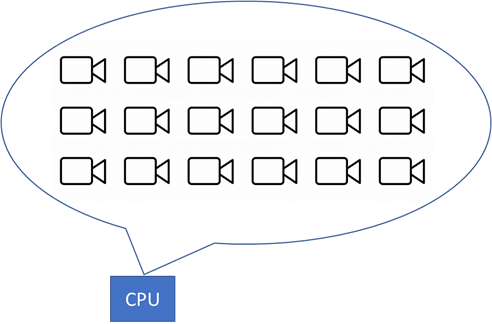

As computational power continues to increase, it becomes less and less necessary to have more than a single controller in most applications. Modern CPUs can easily exceed 16 cores and 32 threads, which allows up to 32 tasks to run in parallel (excluding the capacity needed for the OS and drivers). In other words, a single CPU that costs around $500 can have enough computational power for 32 cameras running simultaneously.
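As a rough illustration of the single-controller idea, the sketch below dispatches one frame per camera to a shared pool of worker threads on one machine. It is a minimal sketch under stated assumptions, not a production design: `grab_frame` and `inspect_frame` are hypothetical placeholders for real acquisition and vision routines (edge detection, CNN inference, etc.), frames are simulated byte buffers, and the pool size of 32 simply mirrors the CPU example above. In CPython, CPU-bound pure-Python work would need a process pool, or native libraries that release the GIL, to actually use all cores in parallel.

```python
from concurrent.futures import ThreadPoolExecutor

NUM_CAMERAS = 32  # assumption: one camera per hardware thread, as in the example above
NUM_WORKERS = 32  # assumption: size of the shared worker pool on the single controller


def grab_frame(camera_id: int) -> bytes:
    """Hypothetical stand-in for acquiring an image from a standard (non-smart) camera."""
    return bytes([camera_id % 256]) * 1024  # fake 1 KiB frame


def inspect_frame(camera_id: int, frame: bytes) -> tuple[int, bool]:
    """Hypothetical inspection routine; here it only checks that the frame is non-empty."""
    passed = len(frame) > 0
    return camera_id, passed


def run_inspection_cycle(num_cameras: int = NUM_CAMERAS,
                         workers: int = NUM_WORKERS) -> dict[int, bool]:
    """Acquire one frame per camera and process all frames on one shared pool."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        futures = [pool.submit(inspect_frame, cam, grab_frame(cam))
                   for cam in range(num_cameras)]
        # Map each camera id to its pass/fail result.
        return dict(f.result() for f in futures)


if __name__ == "__main__":
    results = run_inspection_cycle()
    print(f"{sum(results.values())}/{len(results)} cameras passed")
```

The design choice here is that cameras only acquire images, while all processing shares one pool, so adding a camera adds work to the pool rather than adding another processor.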

The question you may then ask yourself is: why does each one of my cameras require its own ‘brain’ on a machine? The answer can be complicated (or very simple). We will skip the financial reasons, as they are not the purpose of this article. The main technical reason has to do with the complexity of writing programs for parallel computing. Most importantly, most basic vision controllers don’t actually require any programming and mostly consist of drawing boxes and lines on a basic UI.

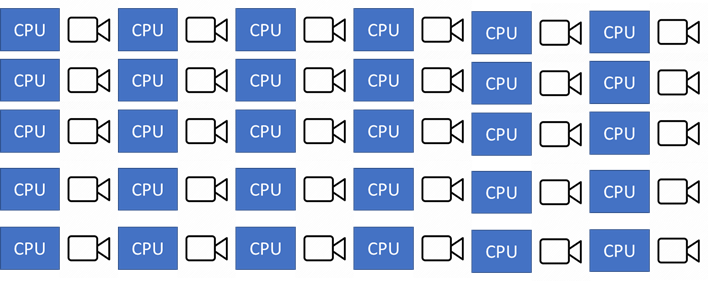

A single camera attached to a single controller is commonly referred to as DCS, while multiple cameras sharing a single controller are commonly referred to as CCS. We will discuss the differences between these two approaches, and why you should choose CCS instead of DCS.

What is DCS (Distributed Computational System)

DCS (Distributed Computational System) is a paradigm in which signal acquisition and processing are performed locally at each node. For example, if you have 100 ‘smart’ cameras performing quality inspection, with the image processing done locally in each camera, this is a DCS. In short, a DCS performs both image acquisition and image processing locally at each inspection site.

(DCS with 30 dedicated CPUs, one for each camera)

(OPTIONAL: A ‘smart’ camera is simply a device that performs signal acquisition and signal processing locally within the camera module. In other words, a ‘smart’ camera is simply a camera with enough processing power to perform image processing within the camera after taking the picture.)

What is CCS (Centralized Computational System)

In a CCS, the signal processing is performed at a centralized unit instead of locally at each signal acquisition device. CCS vs DCS in manufacturing is analogous to cloud vs edge computing in IoT. For example, Amazon’s Alexa works like a CCS: inference on your voice is done on centralized server(s) instead of locally on your smart speaker.

In the manufacturing industry, it is very common to see DCS rather than CCS, with the signal processing performed locally within the acquisition device. As a result, it is common to find very different software and hardware from many different companies in a single factory, all performing the same task of quality inspection.

Case Study:

The customer asked EAMVision to install cameras at 100 different locations for similar quality inspection tasks, and to evaluate the pros and cons of DCS and CCS. For the DCS approach, the plan was to use 100 smart cameras, each capable of processing images locally. For the CCS approach, we would purchase 100 standard cameras plus a single centralized computer and a secondary backup computer, with all image processing performed on the centralized computer.

Disadvantages of DCS

Wasted Computational Power

Because each ‘smart’ camera has its own processing unit, that unit will be utilized only about 1% of the time and sit idle the other 99% for most of the camera’s lifespan. For example, a ‘smart’ camera on a machine that produces 60 PPM (parts per minute) with 10 ms of image processing time per part is fully utilized only about 1% of the time (60 × 10 ms = 0.6 seconds of CPU time in every 60 seconds). In other words, a single computer can be enough to perform the machine vision processing of 100 ‘smart’ cameras.
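The utilization arithmetic above can be reproduced in a few lines. This sketch assumes one image per part and ignores acquisition, transfer, and OS overhead, so the cameras-per-core figure is an idealized upper bound, not a sizing rule.

```python
def cpu_utilization(parts_per_minute: float, processing_time_s: float) -> float:
    """Fraction of each minute the processor is busy, assuming one image per part."""
    busy_seconds_per_minute = parts_per_minute * processing_time_s
    return busy_seconds_per_minute / 60.0


def cameras_per_core(parts_per_minute: float, processing_time_s: float) -> float:
    """Idealized number of identical cameras one core could serve at 100% load."""
    return 1.0 / cpu_utilization(parts_per_minute, processing_time_s)


# Values from the example in the text: 60 PPM, 10 ms of processing per image.
print(f"utilization: {cpu_utilization(60, 0.010):.1%}")
print(f"cameras per core: {cameras_per_core(60, 0.010):.0f}")
```

With these inputs the processor is busy about 1% of the time, so one fully loaded core could in principle serve about 100 such cameras before any headroom is reserved.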

Critics may argue that this approach increases delay. This is certainly true, and CCS may not be suitable for very time-sensitive applications. However, most of the delay can be mitigated using multiple cores, parallel processing, and proper allocation of bandwidth.
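To put a number on the delay concern, consider the worst case in which every camera triggers at the same instant and the shared workers process frames in "waves". This is a simplified model under stated assumptions: each worker handles one frame in exactly `processing_time_s`, with no contention and no network transfer time; the worker counts are illustrative.

```python
import math


def worst_case_latency_s(num_cameras: int, num_workers: int, processing_time_s: float) -> float:
    """Worst-case wait when every camera triggers simultaneously.

    Frames are processed num_workers at a time, so the last frame finishes
    after ceil(num_cameras / num_workers) processing times.
    """
    waves = math.ceil(num_cameras / num_workers)
    return waves * processing_time_s


# 100 cameras, 10 ms per image, shared pools of different (illustrative) sizes.
for workers in (1, 8, 32):
    latency_ms = worst_case_latency_s(100, workers, 0.010) * 1000
    print(f"{workers:>2} workers -> worst-case {latency_ms:.0f} ms")
```

With a single worker the last frame waits a full second, while 32 workers cut the worst case to four 10 ms waves, i.e. 40 ms; in practice triggers are rarely perfectly simultaneous, so typical delays are lower.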

High Break-Even Price

DCS has a very high break-even price (BEP), and a high BEP is often the main barrier to adopting machine vision at a manufacturing site.

More specifically, only a small fraction of the hardware cost of a ‘smart’ camera used in a DCS (on the order of 1% to 10%) goes to the camera itself, while roughly 90% or more goes to the processor. Just to give you some perspective, your phone’s 12MP color camera costs about $26 in parts. Yet most ‘smart’ machine vision cameras cost more than $10k. This is mainly due to the cost of the x86 CPU in each ‘smart’ camera, plus CPU integration, and that fancy, confusing UI. Simply put, depending on the application and workload, a single computer can potentially perform the task of 100 ‘smart’ cameras, which leads to a significantly lower BEP.
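A back-of-the-envelope hardware comparison for the 100-station case study can be sketched as follows. Only the $10k smart-camera price comes from the text; the standard-camera and central-computer prices are hypothetical placeholders to be replaced with real quotes. The model also ignores lenses, lighting, cabling, networking, software licenses, and integration labor.

```python
def dcs_hardware_cost(num_stations: int, smart_camera_price: float) -> float:
    """DCS: one self-contained 'smart' camera per inspection station."""
    return num_stations * smart_camera_price


def ccs_hardware_cost(num_stations: int, camera_price: float,
                      computer_price: float, num_computers: int = 2) -> float:
    """CCS: one standard camera per station plus a central computer and a backup."""
    return num_stations * camera_price + num_computers * computer_price


SMART_CAMERA = 10_000.0     # from the text (lower bound for most smart cameras)
STANDARD_CAMERA = 1_000.0   # hypothetical placeholder
CENTRAL_COMPUTER = 5_000.0  # hypothetical placeholder

dcs = dcs_hardware_cost(100, SMART_CAMERA)
ccs = ccs_hardware_cost(100, STANDARD_CAMERA, CENTRAL_COMPUTER)
print(f"DCS: ${dcs:,.0f}  CCS: ${ccs:,.0f}  ratio: {dcs / ccs:.1f}x")
```

The point of the sketch is the structure of the cost, not the specific prices: DCS cost scales with the per-station processor, while CCS cost scales mainly with the (cheaper) per-station camera plus a fixed central cost.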

DCS built on ‘smart’ cameras is strongly promoted by corporate machine vision vendors because of its high margins. On the other hand, there is little to no margin in selling cameras that only perform image acquisition, mainly because the standard machine vision camera market is saturated and well established. However, a saturated and well-established market is often where you will find the best-quality products.

Critics may argue that a ‘smart’ camera is better than a normal camera. Do not let the fast-talking sales pitch fool you. Most CMOS sensors in ‘smart’ cameras are no better than the one in your smartphone, and are often significantly worse. The advancement of smartphones is what pushed industrial machine vision forward, not the other way around.

Expensive to Maintain

Imagine managing hundreds of ‘smart’ cameras performing similar quality inspection tasks, all with different interfaces, handshaking protocols, and maintenance requirements: the labor cost will quickly grow out of control.

To maintain such a large set of machine vision systems, one must interconnect all the ‘smart’ cameras in order to perform basic analytics and maintenance cycles. A company that interconnects all its ‘smart’ cameras has essentially built a CCS with none of the advantages of CCS and all the disadvantages of DCS.

Long Upgrade Cycle

The cost of 100 ‘smart’ cameras must be paid again at every upgrade cycle. Given today’s rapid improvement in machine vision, that cycle can be exceptionally short. If a company chooses to skip upgrade cycles, it will be at a disadvantage compared with manufacturing sites using the CCS model.

With CCS, a hardware/software upgrade involves just a single computer. In contrast to the early boom of machine vision, the modern machine vision industry is about 90% software and 10% hardware. A ‘smart’ camera, on the other hand, is built with its software tightly integrated with its hardware, and one cannot simply make a ‘smart’ camera smarter.

Difficulty Leveraging Big Data

In short, if your data is distributed across 100 different storage devices, it will always be more difficult to streamline the training and deployment of deep learning models than with centralized data storage. Most importantly, every time a deep learning model is updated with newer data, it takes an extensive amount of time to transfer the new model to 100 ‘smart’ cameras, versus a single computer.

(I still remember the days of requesting that each individual process tool be shut down to perform a CNN model update, which was very time-consuming. Now we simply move the workload to a backup computer and perform the upgrade with ease, without any impact on manufacturing.)
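The update workflow described above (move the workload to the backup, then upgrade) resembles an active/standby, or blue-green, deployment. The sketch below models one plausible version of it in memory only; the class names, computer names, and model version strings are hypothetical, and a real system would also validate the new model and drain in-flight frames before switching.

```python
from dataclasses import dataclass, field


@dataclass
class VisionComputer:
    """Hypothetical central vision computer holding one deployed model version."""
    name: str
    model_version: str


@dataclass
class CentralVisionCluster:
    """Active/standby pair: cameras stream to `active`; `standby` can be updated offline."""
    active: VisionComputer
    standby: VisionComputer
    log: list[str] = field(default_factory=list)

    def deploy_model(self, new_version: str) -> None:
        # 1. Load the new model on the standby computer while production keeps running.
        self.standby.model_version = new_version
        self.log.append(f"{self.standby.name}: loaded {new_version}")
        # 2. Swap roles so the camera streams are now served by the updated computer.
        self.active, self.standby = self.standby, self.active
        self.log.append(f"switched cameras to {self.active.name}")
        # 3. Update the former active computer so it is a valid backup again.
        self.standby.model_version = new_version
        self.log.append(f"{self.standby.name}: loaded {new_version}")


cluster = CentralVisionCluster(VisionComputer("primary", "cnn-v1"),
                               VisionComputer("backup", "cnn-v1"))
cluster.deploy_model("cnn-v2")
print(cluster.active.name, cluster.active.model_version)
```

After the call, the cameras are served by the former backup running `cnn-v2`, and the former primary has been brought up to the same version to act as the new backup; at no point were both computers offline.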

Reliability

Contrary to popular belief, DCS is no more reliable than CCS. For example, 100 ‘smart’ cameras with 100 dedicated CPUs have 100 points of failure. On the other hand, one may argue that with CCS, a single failure of the central computer will take down inspection for the entire factory. However, this can be mitigated with a backup computer running concurrently, whereas the same option is not feasible for a DCS with 100 processing units.
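A simple probability sketch makes the reliability trade-off concrete. It assumes that every processing unit (a smart camera’s CPU or a central computer) fails independently with the same hypothetical probability `P` over some period, and it ignores shared failure points such as power or network switches. Note that the metrics differ in impact: a DCS failure usually takes down one station, while a CCS total outage takes down all of them.

```python
def dcs_prob_any_failure(num_units: int, p_fail: float) -> float:
    """Probability that at least one of the DCS processing units fails."""
    return 1.0 - (1.0 - p_fail) ** num_units


def dcs_expected_station_failures(num_units: int, p_fail: float) -> float:
    """Expected number of stations down, since each smart camera fails independently."""
    return num_units * p_fail


def ccs_prob_total_outage(p_fail: float, num_redundant: int = 2) -> float:
    """Probability that the primary and every backup computer fail together."""
    return p_fail ** num_redundant


P = 0.01  # hypothetical per-unit failure probability for the period considered
print(f"DCS, 100 units: P(at least one failure) = {dcs_prob_any_failure(100, P):.1%}")
print(f"DCS, 100 units: expected stations down = {dcs_expected_station_failures(100, P):.1f}")
print(f"CCS, primary + backup: P(total outage) = {ccs_prob_total_outage(P):.2%}")
```

Under these assumptions, the 100-unit DCS will most likely (about 63%) see at least one station go down in the period, while the redundant CCS loses everything only if both computers fail together (0.01%).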

Takeaway

- CCS is not always better than DCS, and vice versa; it largely depends on the application.
  - However, choosing the wrong approach will be very costly.

- ‘Smart’ cameras are not smart, and are mainly used for DCS.
  - A ‘smart’ camera simply has an x86 CPU integrated into it to perform local image processing.

- DCS cannot become smarter over time.
  - For example, the software of ‘smart’ cameras is proprietary and inflexible, and cannot easily be changed to use the latest deep learning models.

- DCS can be difficult to maintain.
  - Depending on the number of machine vision systems, it is often not feasible to maintain a DCS without some type of centralized system to oversee it.

- CCS is cheaper than DCS.
  - A factory can deploy hundreds of cameras with a single processing unit, at potentially as little as 1/50 of the cost of a DCS built on ‘smart’ cameras.

- CCS can be difficult to design.
  - Most machine vision integrators rely heavily on DCS with smart cameras, because the learning curve of CCS is much steeper and engineers with those skills are much harder to come by.

- Hiring a good vision engineer will save you a lot of money; hiring a bad ‘vision’ engineer will cost you a lot of money.